Codphish - ML-Based Prediction Service on Google Cloud

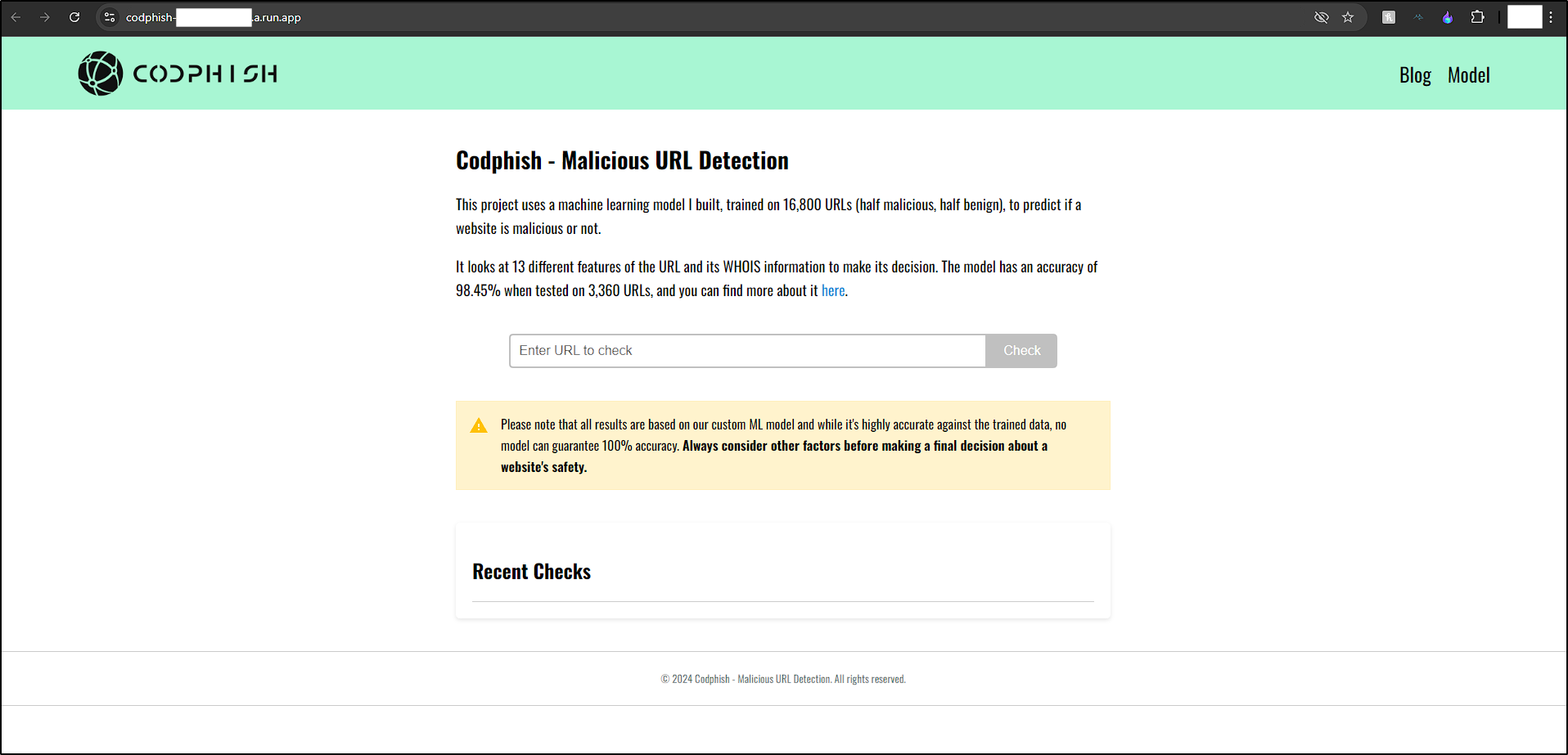

To enhance my “cloud skills,” I signed up for Google Cloud’s free trial, which offers $300 in credit or three months, whichever runs out first, to explore their services. To make the most of the ML model developed earlier, I used Google services to host it on a public website, allowing everyone to try it: “codph.mcfajao.com”.

The website is currently down as I have exhausted the $300 credit provided by Google Cloud. If you didn’t get a chance to try it out, check the video below.

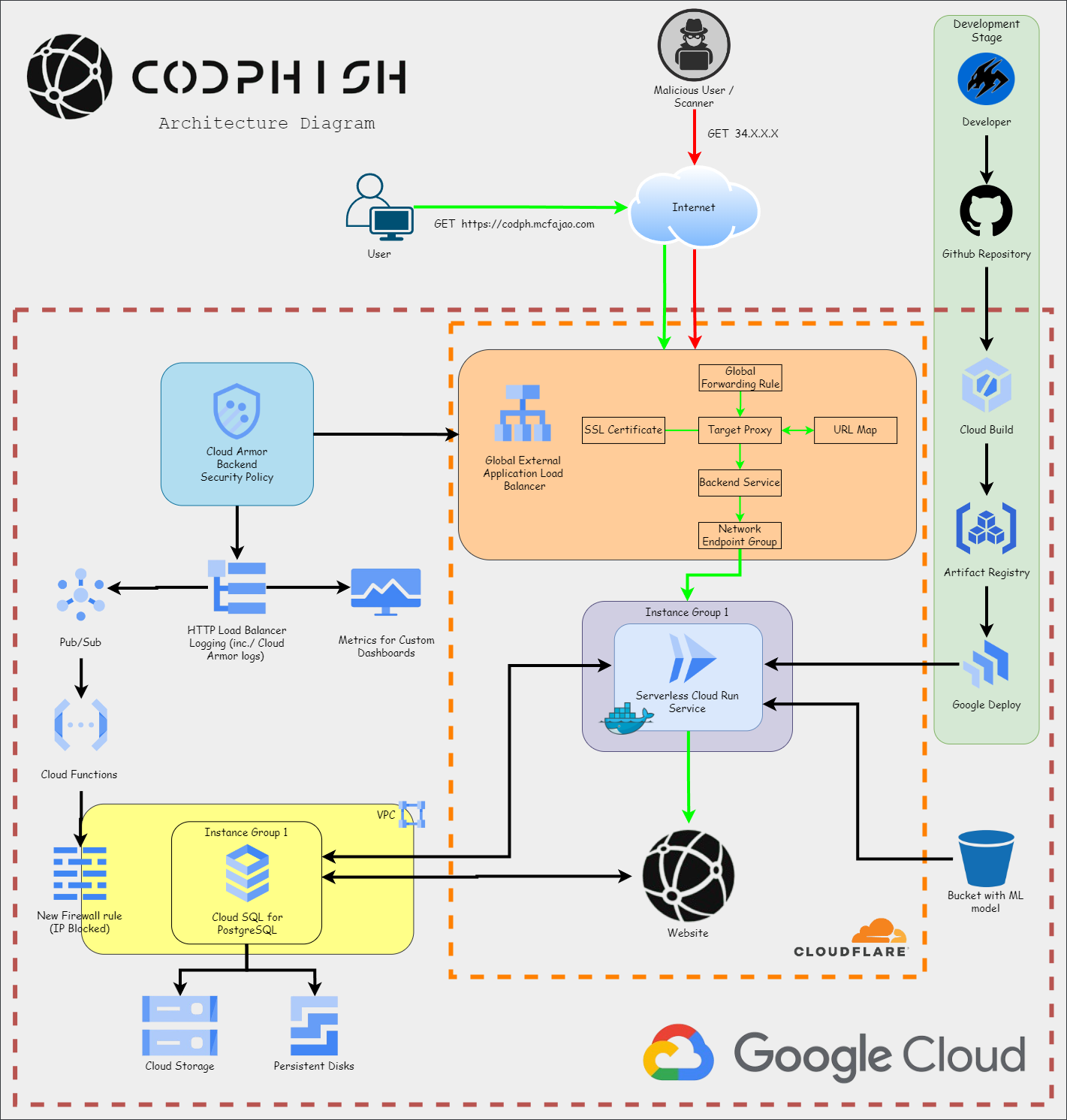

Architecture

Creation Steps

Development Process

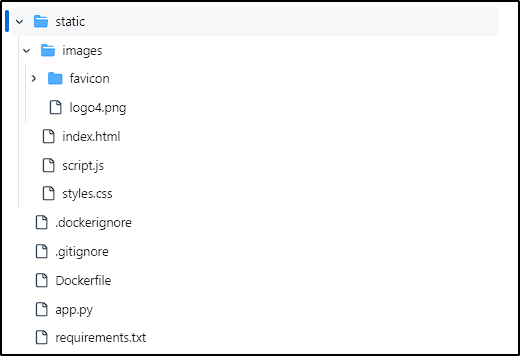

GitHub Repo - Website Structure

First, we need to create our app.py, which will be responsible not only for extracting the URL features but also for integrating various Google Cloud components such as Databases, Buckets, and Users. Additionally, we used the Python library “validators” to ensure the user input is a valid URL:

```python
# Portion extracted from app.py
if not validators.url(url):
    return jsonify({'error': 'Invalid URL'}), 400
```
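To make the feature-extraction step a bit more concrete, here is a minimal sketch that uses only the standard library. The post does not list the features the model was trained on, so the three features below (`url_length`, `num_dots`, `has_ip_host`) and the function names are hypothetical placeholders. The scheme and host check is a simplified stand-in for `validators.url`, not a replacement for it.

```python
import ipaddress
from urllib.parse import urlparse


def is_probably_valid_url(url: str) -> bool:
    """Simplified stand-in for validators.url(): require http(s) and a host."""
    parsed = urlparse(url)
    return parsed.scheme in ("http", "https") and bool(parsed.hostname)


def extract_features(url: str) -> dict:
    """Compute a few hypothetical lexical features from a URL."""
    host = urlparse(url).hostname or ""
    try:
        ipaddress.ip_address(host)  # raises ValueError if host is not an IP
        has_ip_host = True
    except ValueError:
        has_ip_host = False
    return {
        "url_length": len(url),
        "num_dots": host.count("."),
        "has_ip_host": has_ip_host,
    }
```

In the real app.py, the resulting feature dictionary would be turned into whatever input format the trained model expects.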

Next, since we will use Docker to create an image for our service on Google Cloud, we need to create a Dockerfile in our project root and a requirements.txt with all the necessary libraries.

Our repository structure should look like this:

The static folder holds the HTML, CSS, and JavaScript of our website. You can check all the code here.

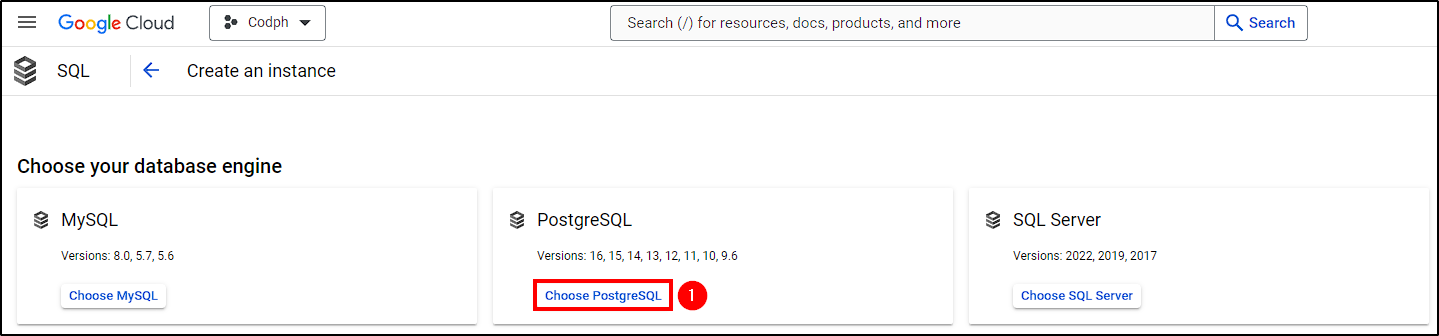

Cloud SQL Instance

Before creating our Docker image, we need to deploy a Cloud SQL instance and database for our app.py to use. But before that, we need to enable the following APIs that we will be using in the future:

- Compute Engine API

- Cloud Run Admin API

- Cloud Functions API

- Serverless Integrations API

- Cloud Logging API

- Cloud Build API

- Artifact Registry API

- Cloud Pub/Sub API

- Identity and Access Management (IAM) API

- Cloud SQL Admin API

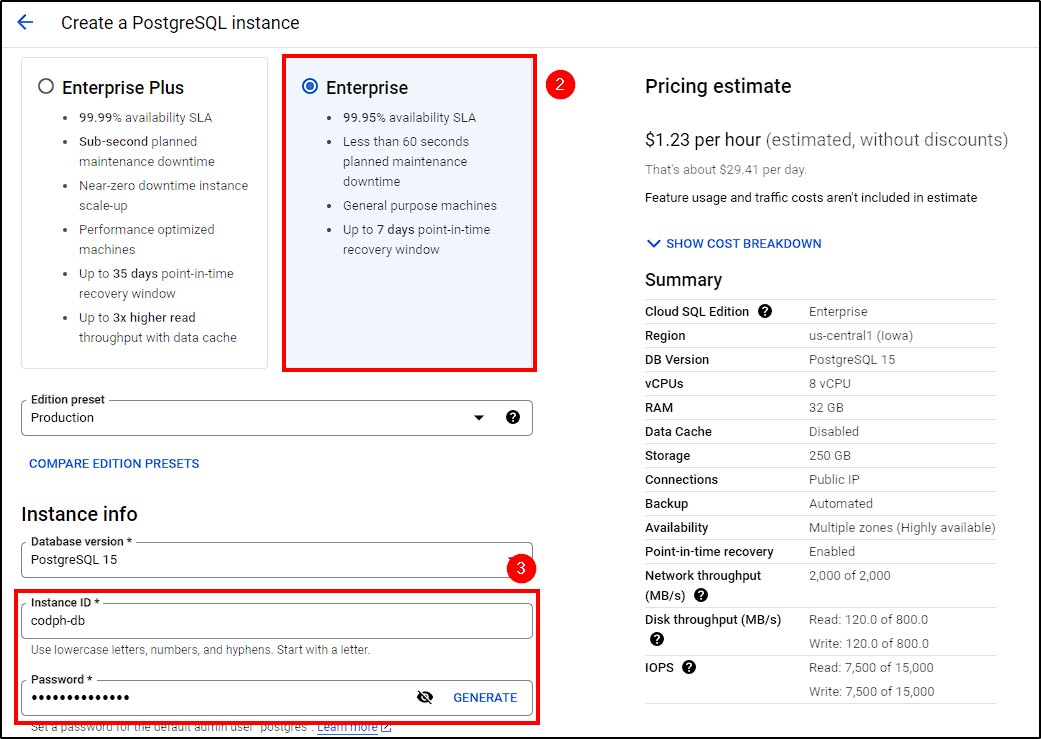

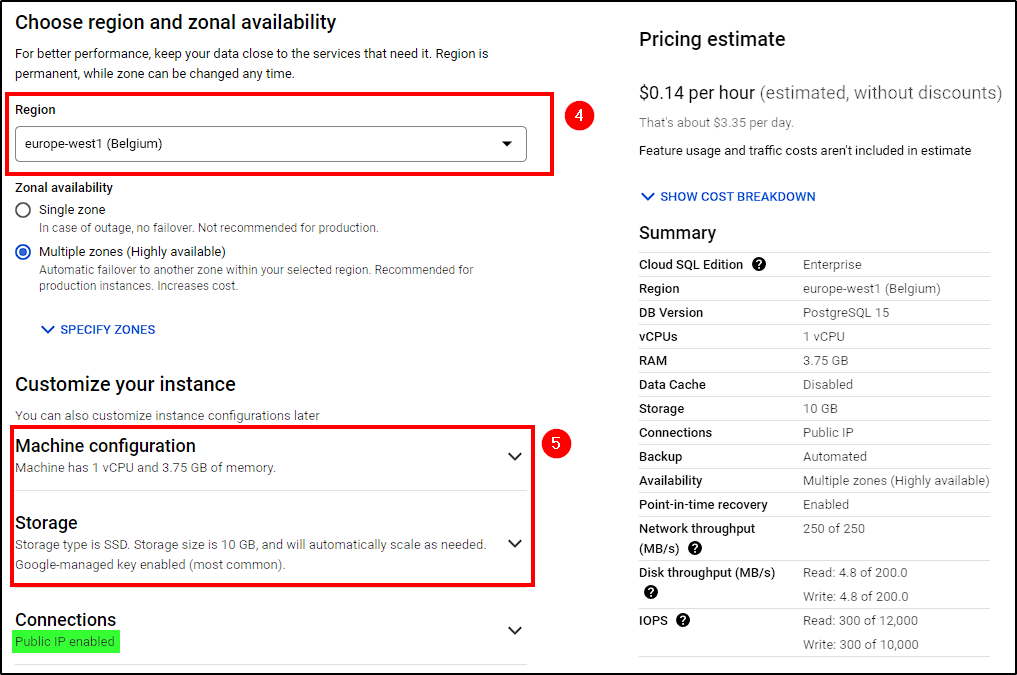

Next, sign in to Google Cloud, click on the hamburger menu, and go to Cloud SQL > Create Instance. Create a PostgreSQL instance following these steps:

- Select the Enterprise option

- Name the instance and set a password

- Choose a region (we used europe-west1)

- Select the lowest Machine Configuration and Storage options

- Ensure the instance is reachable by Public IP

Create the instance and wait for it to be ready.

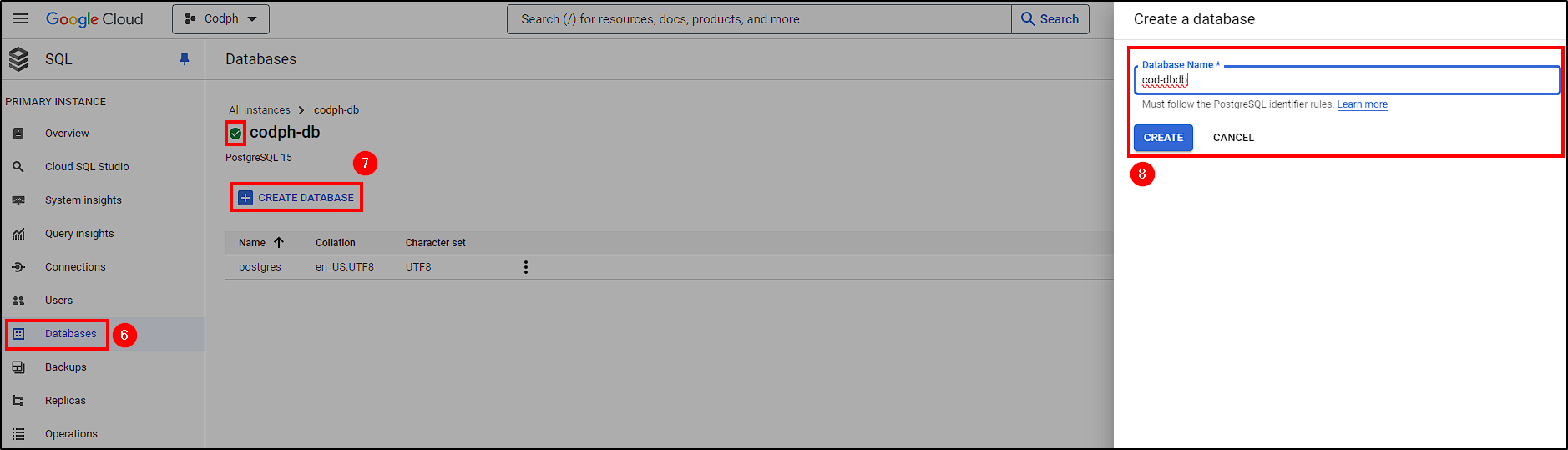

Next, create a database and a user for Codphish. Navigate to Database > Create Database and name it. For the user, go to Users > Add User Account > Built-in Authentication, name it, and set a strong password.

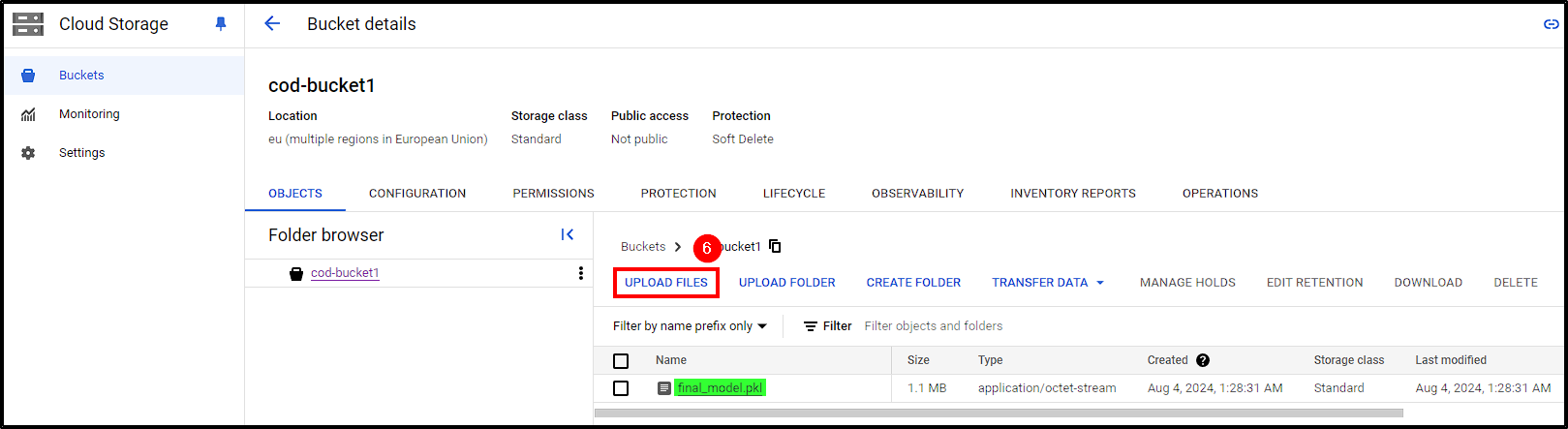

Cloud Storage Bucket

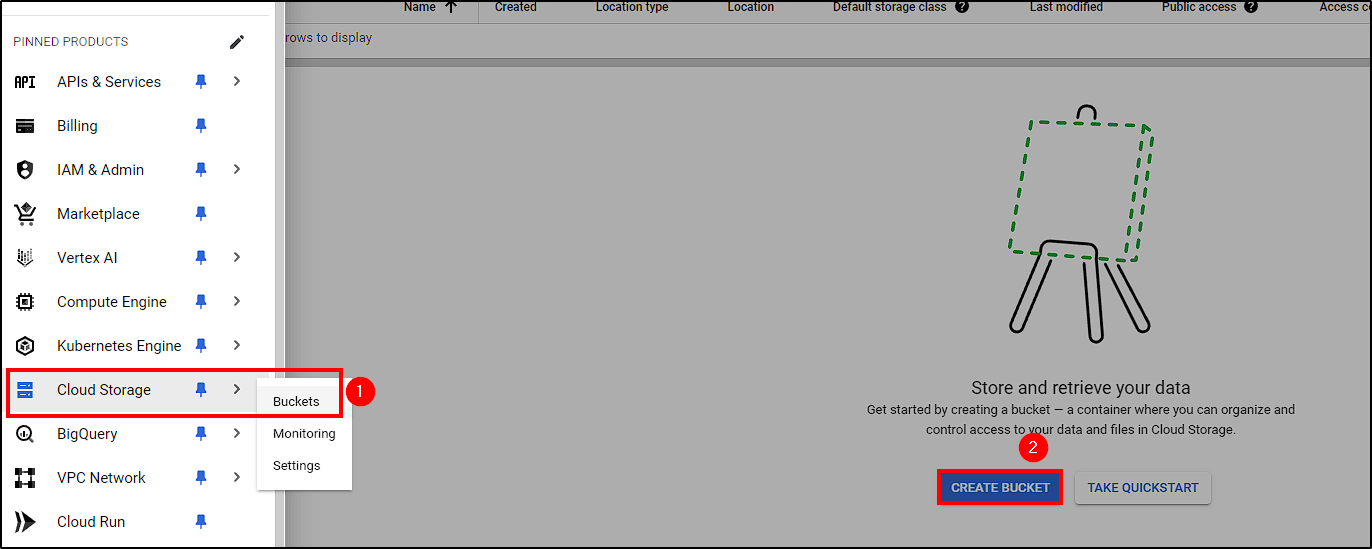

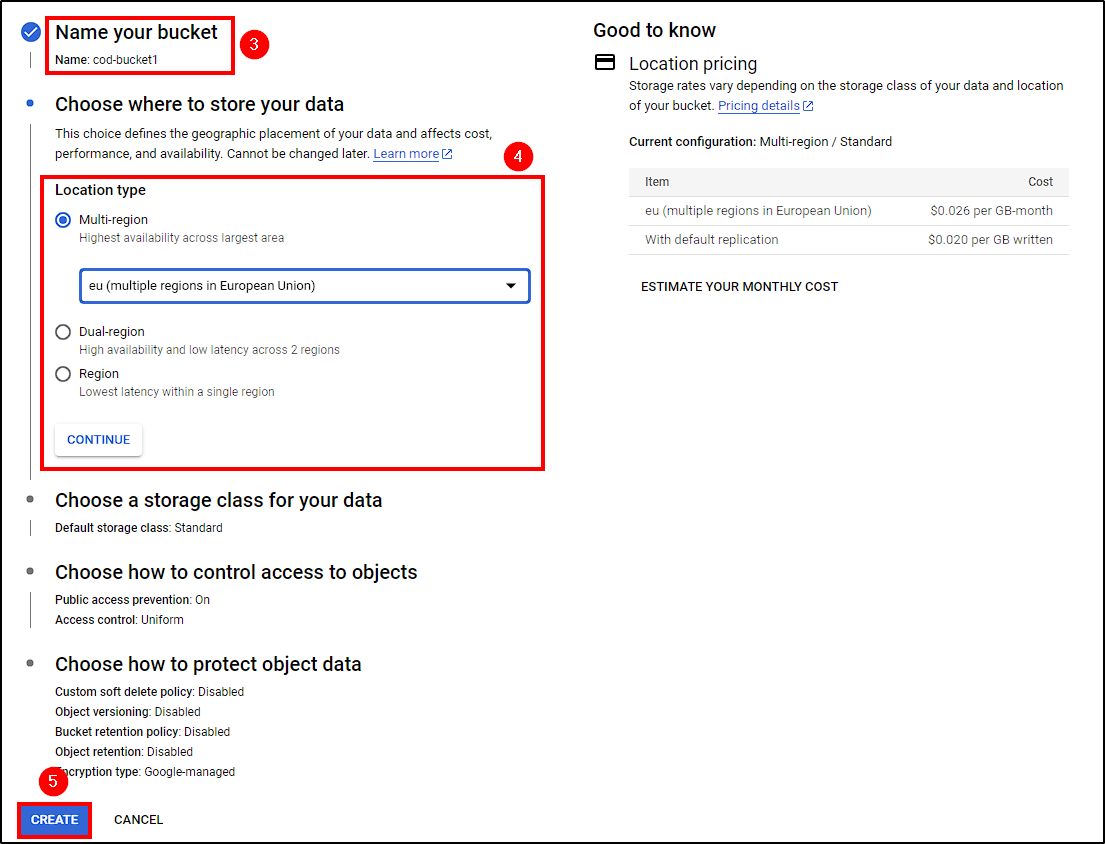

The final prerequisite before building our image is to create a bucket to upload our ML model. Navigate to Cloud Storage > Buckets > Create Bucket, name the bucket and create it. After successfully creating it, just upload the ML model.
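For reference, this is roughly how the service could fetch the model from the bucket at startup. It is a sketch under assumptions: the post does not show this part of app.py, and the object name `model.pkl` and the local path are hypothetical. It relies on the `google-cloud-storage` client (`Client().bucket(...).blob(...).download_to_filename(...)`), and the client is injectable so the function can be exercised without touching Google Cloud.

```python
def download_model(bucket_name: str, blob_name: str, dest_path: str, client=None) -> str:
    """Download one object from a Cloud Storage bucket to a local file."""
    if client is None:
        # Imported lazily so the function can be tested without the library.
        from google.cloud import storage  # requires google-cloud-storage
        client = storage.Client()
    blob = client.bucket(bucket_name).blob(blob_name)
    blob.download_to_filename(dest_path)
    return dest_path


# Hypothetical usage inside the service:
#   download_model(os.environ["BUCKET_NAME"], "model.pkl", "/tmp/model.pkl")
```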

Building our Docker Image

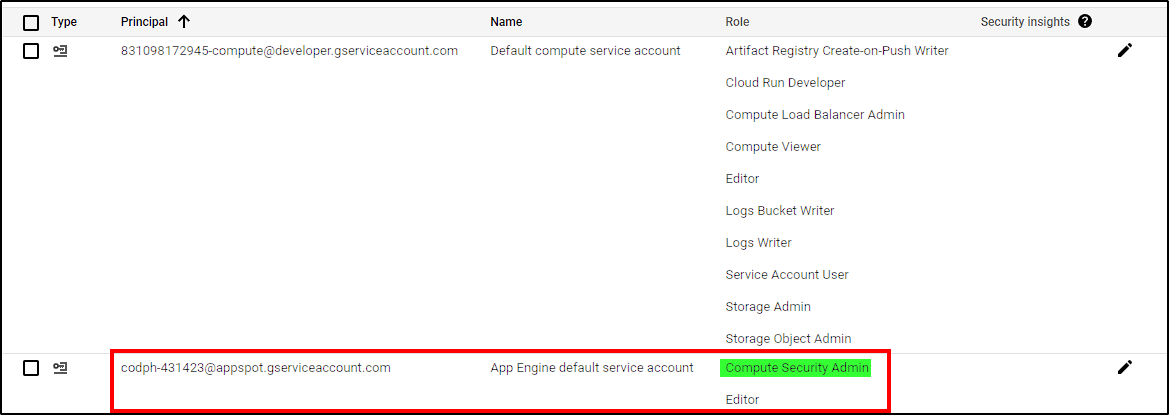

To build and deploy our image, as well as some future functions, we will use a service account with some specific roles:

- Artifact Registry Create-on-Push Writer

- Cloud Run Developer

- Compute Load Balancer Admin

- Compute Viewer

- Editor

- Logs Bucket Writer

- Logs Writer

- Service Account User

- Storage Admin

- Storage Object Admin

Initially, the account only has the Editor role. To assign the required roles, go to IAM & Admin > IAM > (Pencil Icon) and add each role.


Now, open a new Google Cloud Shell and run the following commands (ensure you are in the correct project):

```shell
# Clone the repo that contains the app files
git clone «github_repo»

# Build the image (run from the directory that contains the Dockerfile)
gcloud builds submit --tag gcr.io/«Project_ID»/codphish
```

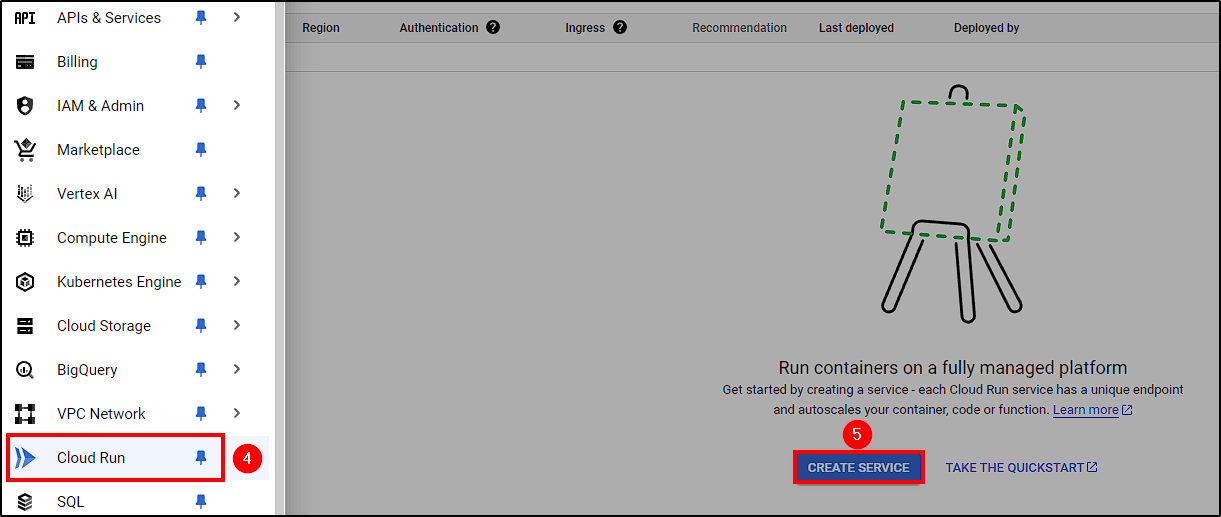

Deploying our Cloud Run Service

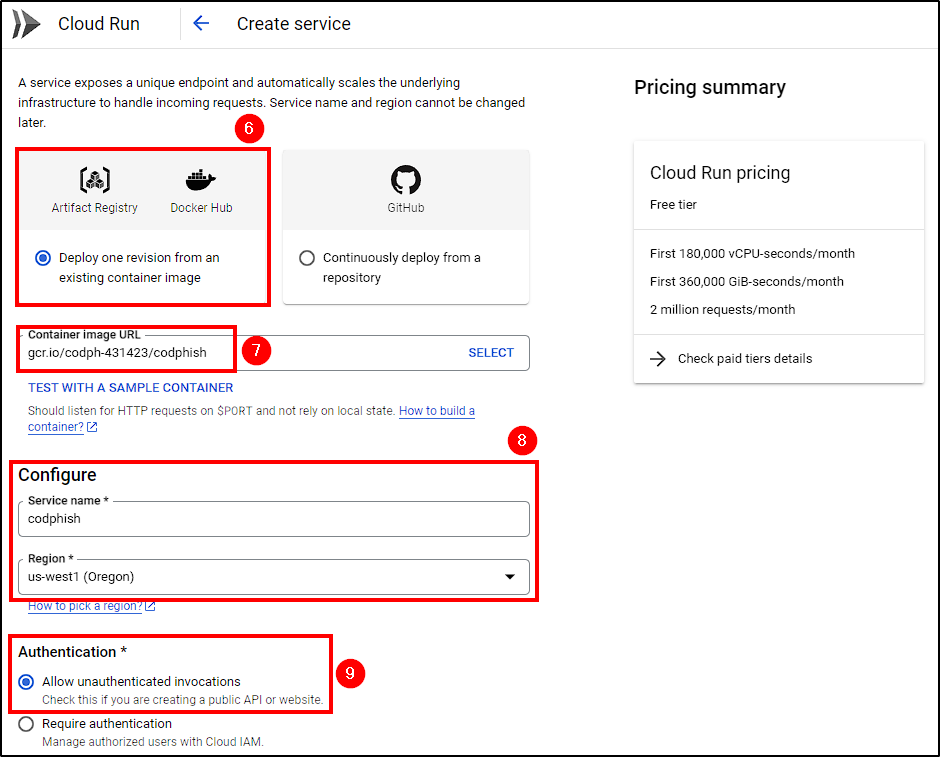

With all components ready, go to Cloud Run > Create Service and choose the following options:

- Deploy an existing container image

- Insert your Image URL

- Name your service and select the region

- Allow all invocations

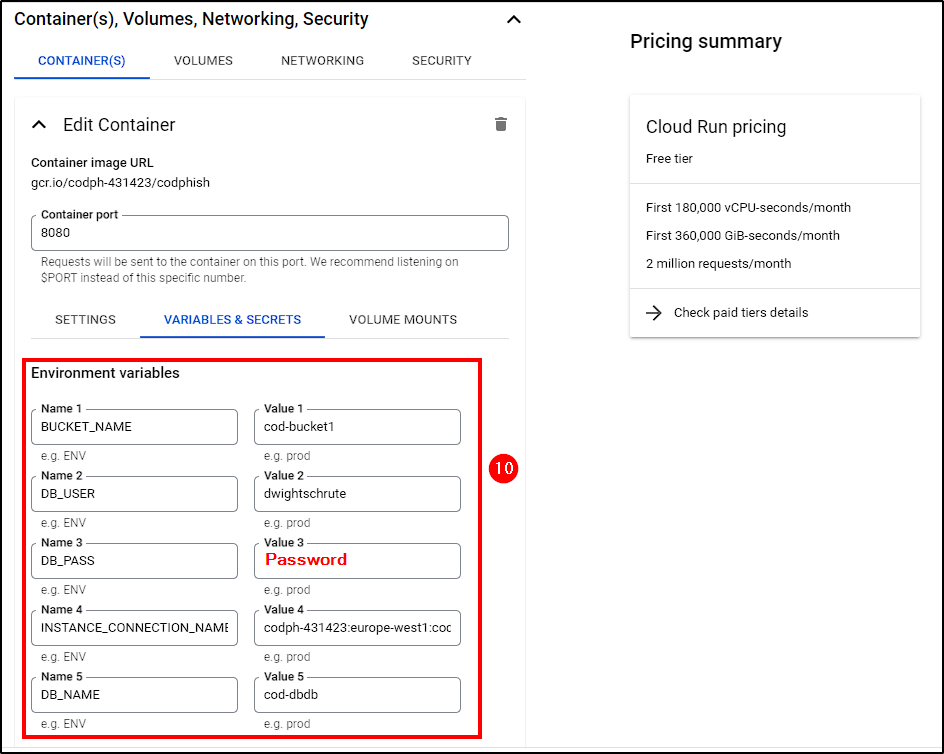

To enable our app to access the database and bucket, create these environment variables with the appropriate values:

- BUCKET_NAME: Name of bucket created earlier

- DB_USER & DB_PASS: SQL User information

- DB_NAME: Database name

- INSTANCE_CONNECTION_NAME: Check your SQL instance details for this info.

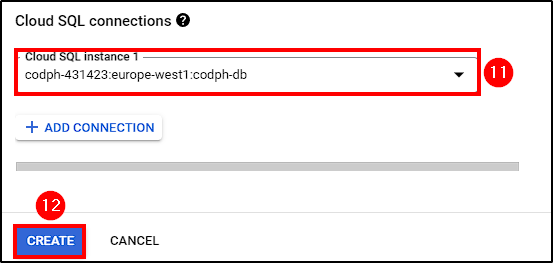

Finally, select the Cloud SQL connection and create the service.
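On the application side, these variables can be read back with `os.environ`. The sketch below is an assumption about how app.py might collect them (the post does not show that code, and `load_db_config` is a hypothetical name). It relies on the fact that Cloud Run exposes an attached Cloud SQL connection as a Unix socket under `/cloudsql/<INSTANCE_CONNECTION_NAME>`.

```python
import os


def load_db_config(env=os.environ) -> dict:
    """Collect the settings that the Cloud Run service exposes as env vars."""
    instance = env["INSTANCE_CONNECTION_NAME"]
    return {
        "user": env["DB_USER"],
        "password": env["DB_PASS"],
        "database": env["DB_NAME"],
        # Cloud Run mounts the Cloud SQL connection as a Unix socket here.
        "unix_socket_dir": f"/cloudsql/{instance}",
        "bucket": env["BUCKET_NAME"],
    }
```

With a PostgreSQL driver, the socket directory is typically passed as the host. A missing variable raises `KeyError` at startup, which tends to be easier to debug than a failed connection later.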

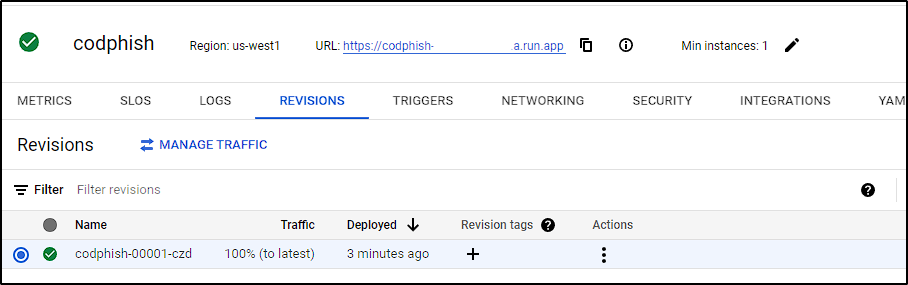

After completion, we can access our website using the service URL.

Load Balancer and Cloud Armor

Currently, we can access our website using the service URL, but is it safe? As it stands, our website is vulnerable to DDoS attacks (application and network layer) and web app attacks. To mitigate these, we can deploy a Load Balancer with a Cloud Armor security policy, which helps prevent a broad range of attacks and logs them so we can monitor any potential threats.

Cloud Armor Security Policy

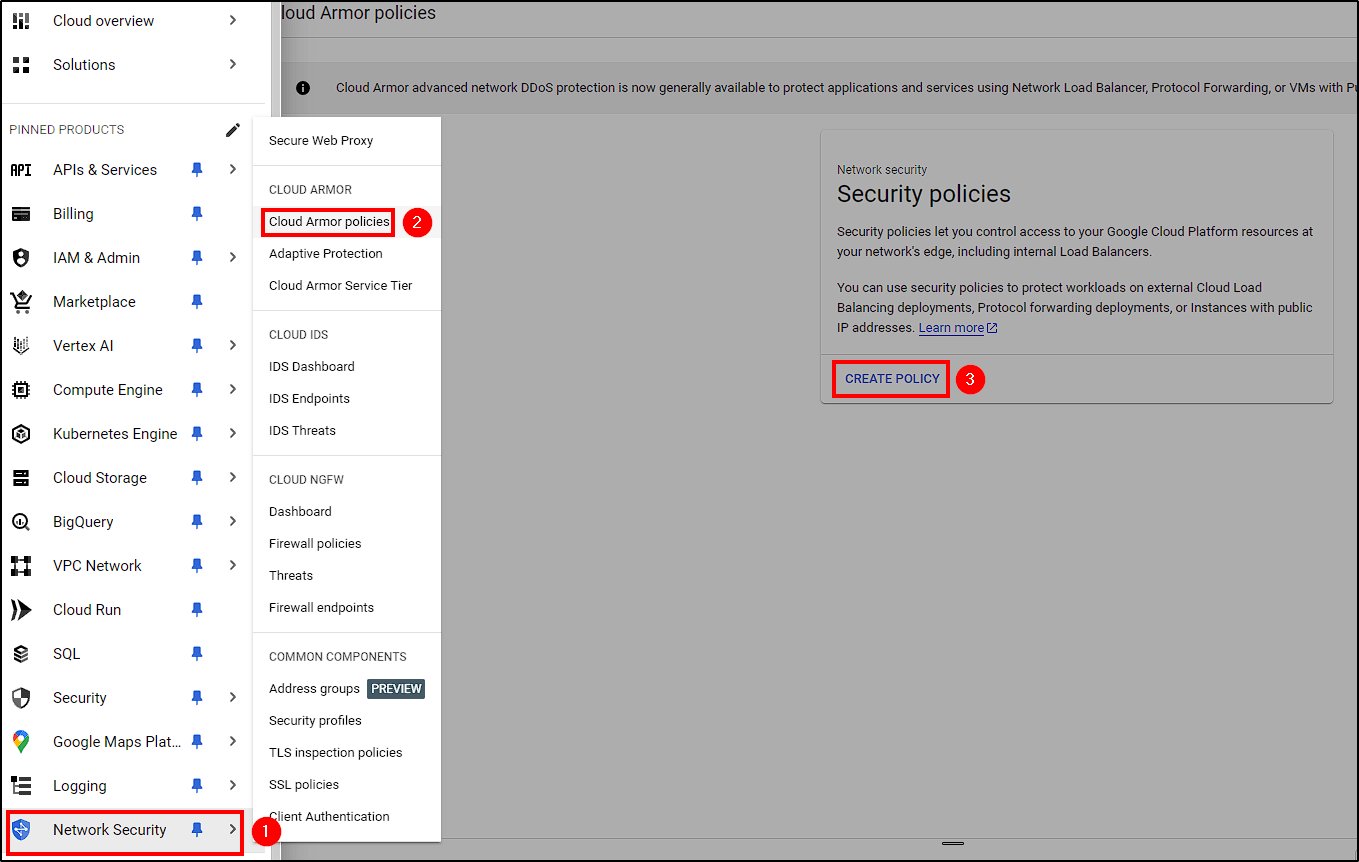

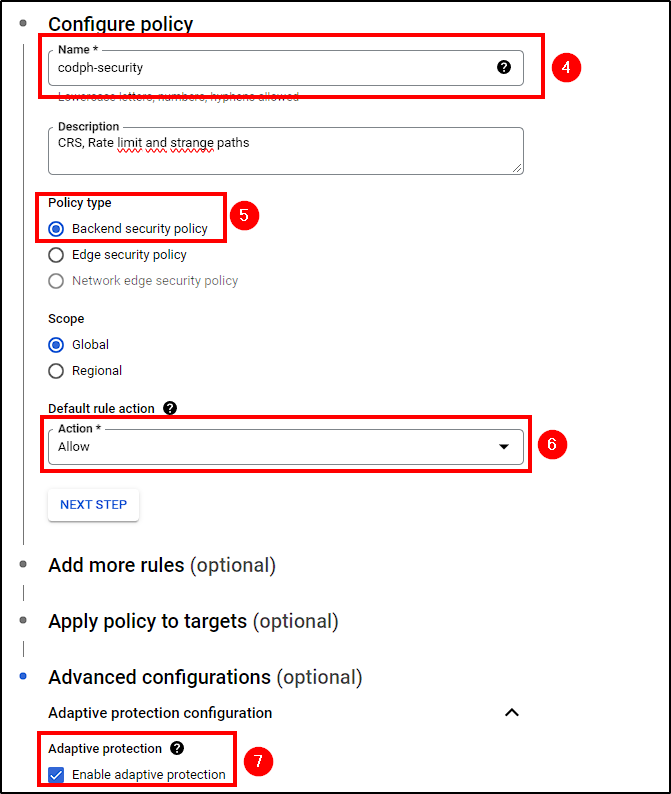

Let’s start with Cloud Armor. First, go to Network Security > Cloud Armor Policies > Create Policy and follow these configurations:

- Name your policy and give it a description (optional)

- Select Backend security policy

- Change the action to Allow

- In Advanced Configurations, enable Adaptive Protection (DDoS protection)

- Select Create

Now that our security policy is created, we can add rules to deny access, rate-limit access, etc. After some time, I received requests from scanners trying different paths in search of loopholes. To counter this, I created rules to respond with a 404 error whenever they get triggered.

Google Cloud has some preconfigured rules that we can use to prevent web app attacks. You can learn more about it here.

```shell
# Rate limit rule creation using Cloud Shell
gcloud compute security-policies rules create 5500 \
  --security-policy=codph-security \
  --expression="true" \
  --action=rate-based-ban \
  --rate-limit-threshold-count=100 \
  --rate-limit-threshold-interval-sec=60 \
  --ban-duration-sec=3600 \
  --conform-action=allow \
  --exceed-action=deny-404 \
  --enforce-on-key=IP
```
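To get a feel for what this rule does, here is a small simulation of its parameters: 100 requests per 60 seconds per IP, then a 404 and a one-hour ban. It is only a simplified model for illustration. The fixed-window counting and the class name `RateBasedBan` are assumptions, and Cloud Armor's internal algorithm may differ.

```python
from dataclasses import dataclass, field


@dataclass
class RateBasedBan:
    threshold_count: int = 100    # --rate-limit-threshold-count
    interval_sec: int = 60        # --rate-limit-threshold-interval-sec
    ban_duration_sec: int = 3600  # --ban-duration-sec
    _window_start: dict = field(default_factory=dict)
    _counts: dict = field(default_factory=dict)
    _banned_until: dict = field(default_factory=dict)

    def handle(self, ip: str, now: float) -> int:
        """Return the HTTP status a request from `ip` at time `now` would get."""
        if now < self._banned_until.get(ip, 0.0):
            return 404  # still banned: exceed action (deny-404)
        start = self._window_start.get(ip)
        if start is None or now - start >= self.interval_sec:
            self._window_start[ip] = now  # start a new counting window
            self._counts[ip] = 0
        self._counts[ip] += 1
        if self._counts[ip] > self.threshold_count:
            self._banned_until[ip] = now + self.ban_duration_sec
            return 404
        return 200  # conform action (allow)
```

Because the rule is keyed on IP (`--enforce-on-key=IP`), each address gets its own counter, so one noisy scanner does not affect other visitors.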

Google Cloud Load Balancing - Custom Domains

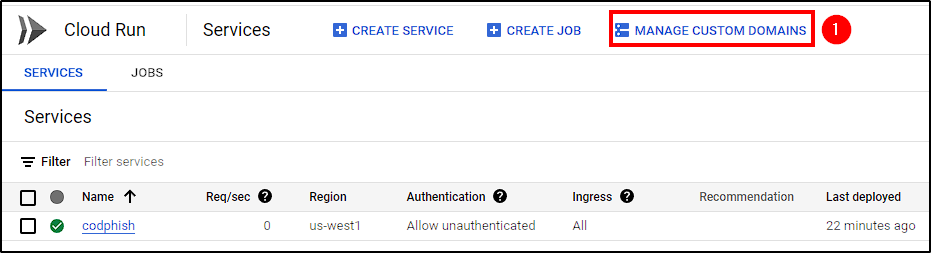

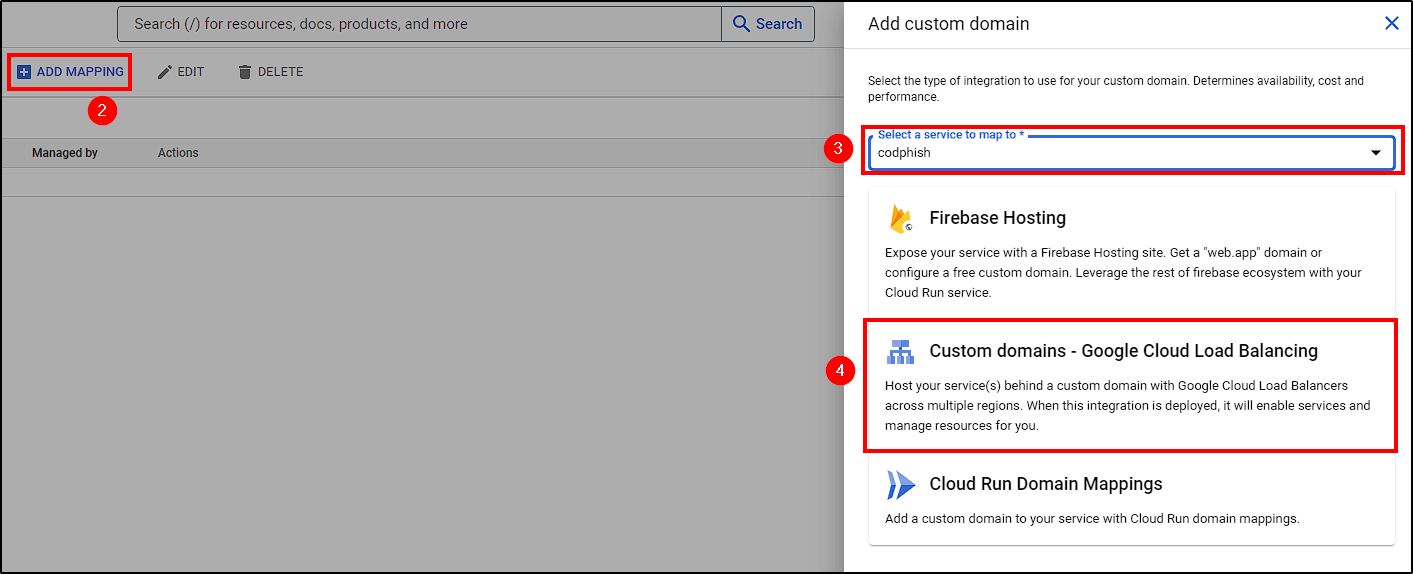

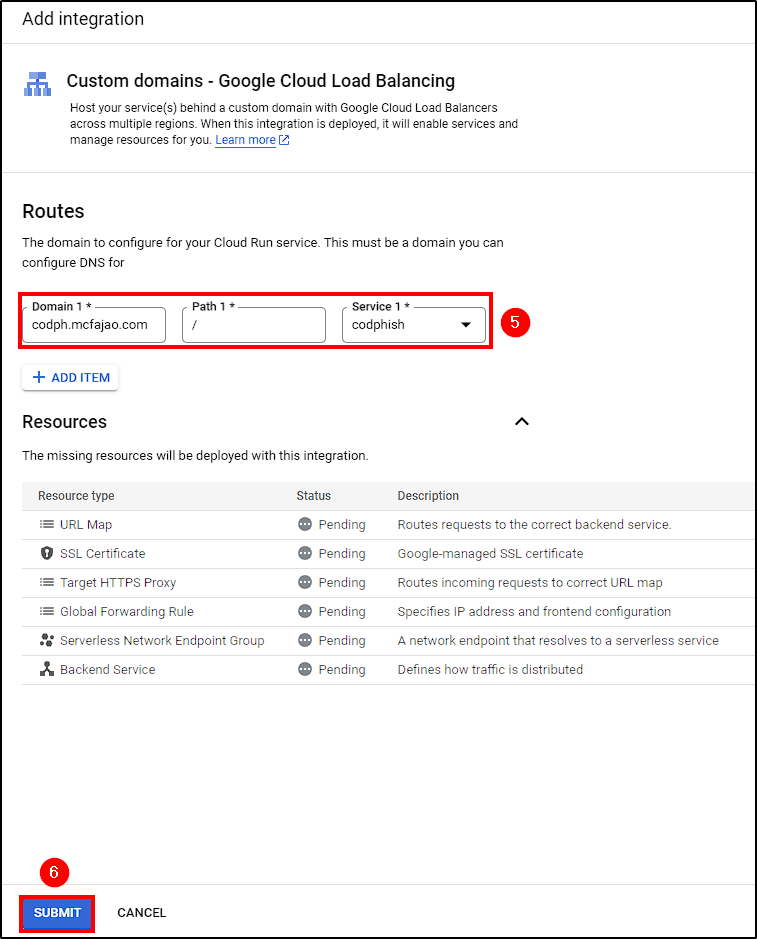

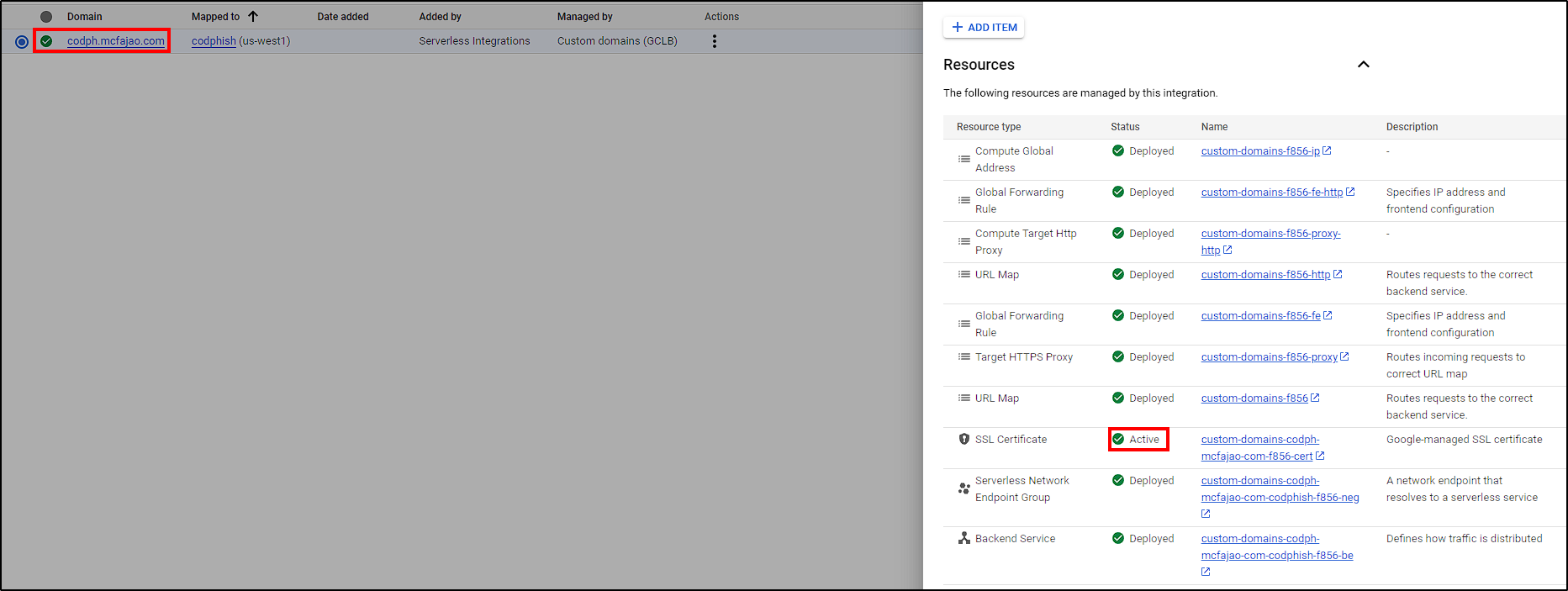

As we can see, our security policy is not yet attached to anything. We could create a load balancer and attach it, but instead, we will use Cloud Run’s Domain Mapping capabilities to host our services behind a custom domain with Load Balancers and all their components (HTTP Proxy, URL Map, SSL Certificate, NEG, Forwarding Rule, and Backend Service).

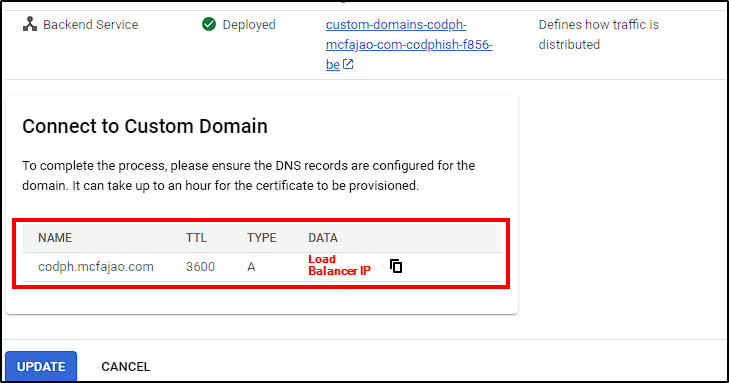

To do this, go back to Cloud Run > Manage Custom Domains, select Your Service Name > Custom domains - Google Cloud Load Balancing, enter the desired domain (“codph.mcfajao.com” in our case) and click Submit.

This way, all those components will be created by Google Cloud.

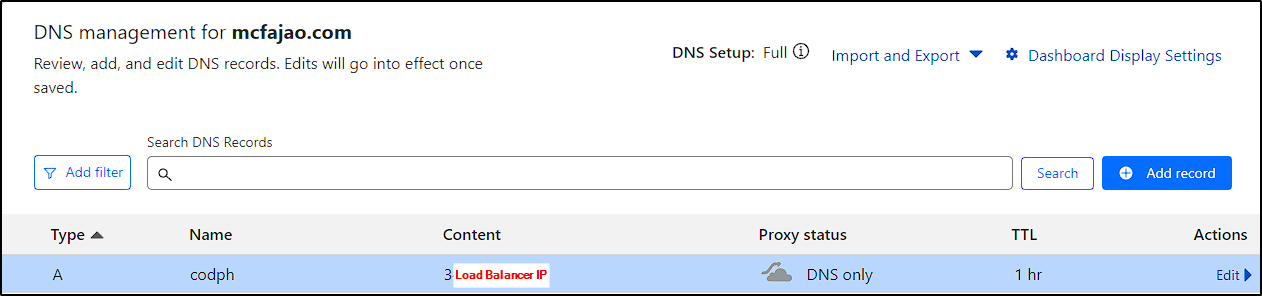

During this process, an IP will be assigned to the Load Balancer, which we will use to create a DNS record. In our case, we just need to log into Cloudflare and add the A record with this IP.

Ensure the Cloudflare proxy is disabled! We will use the load balancer logs to block IPs, so we need to see the threat actor's real IP rather than a Cloudflare address.

After some time (DNS propagation can take 24-48 hours), your Load Balancer will be ready to receive requests.

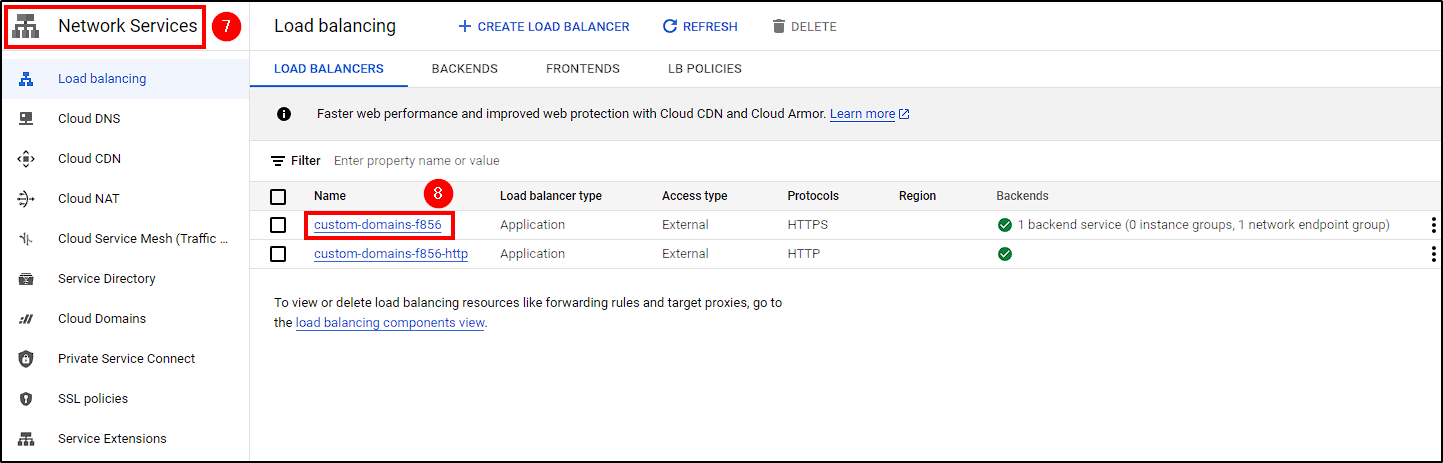

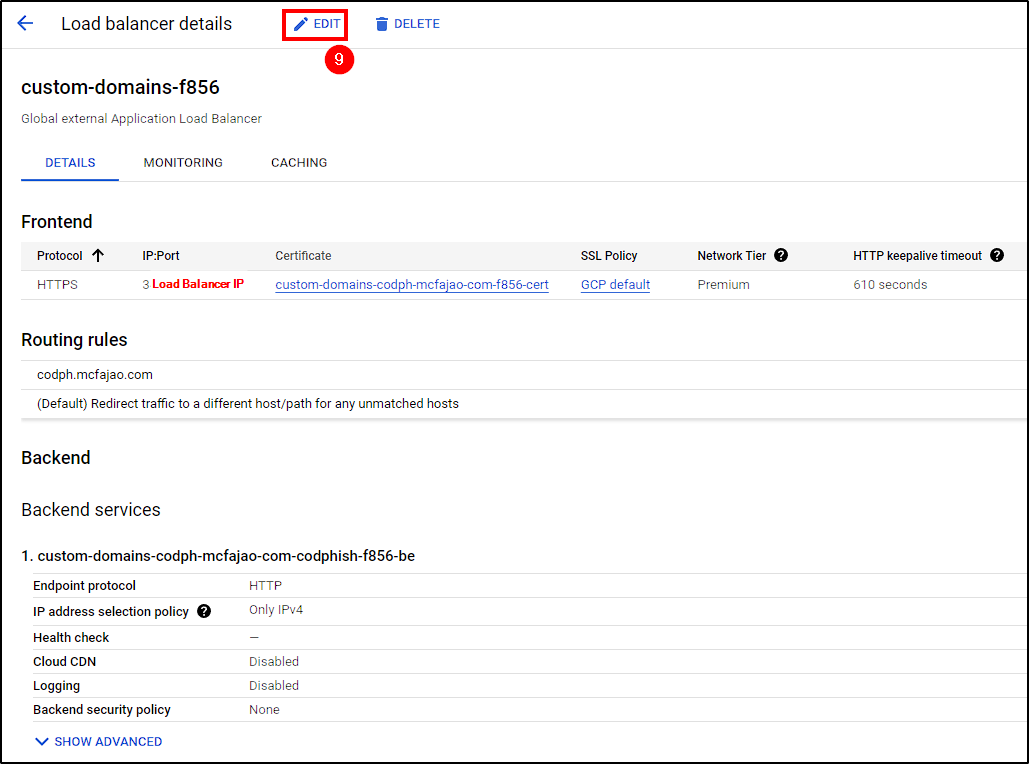

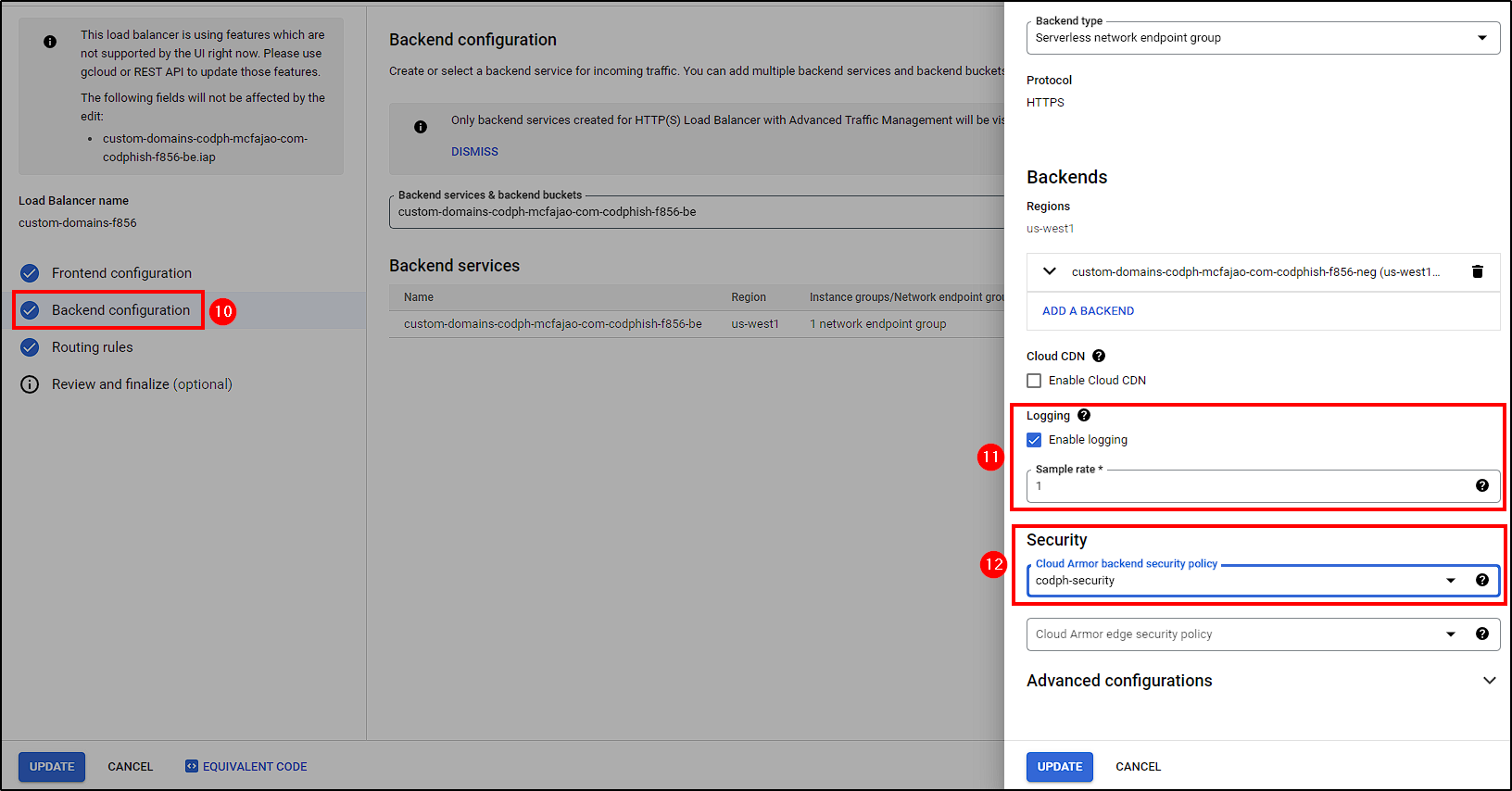

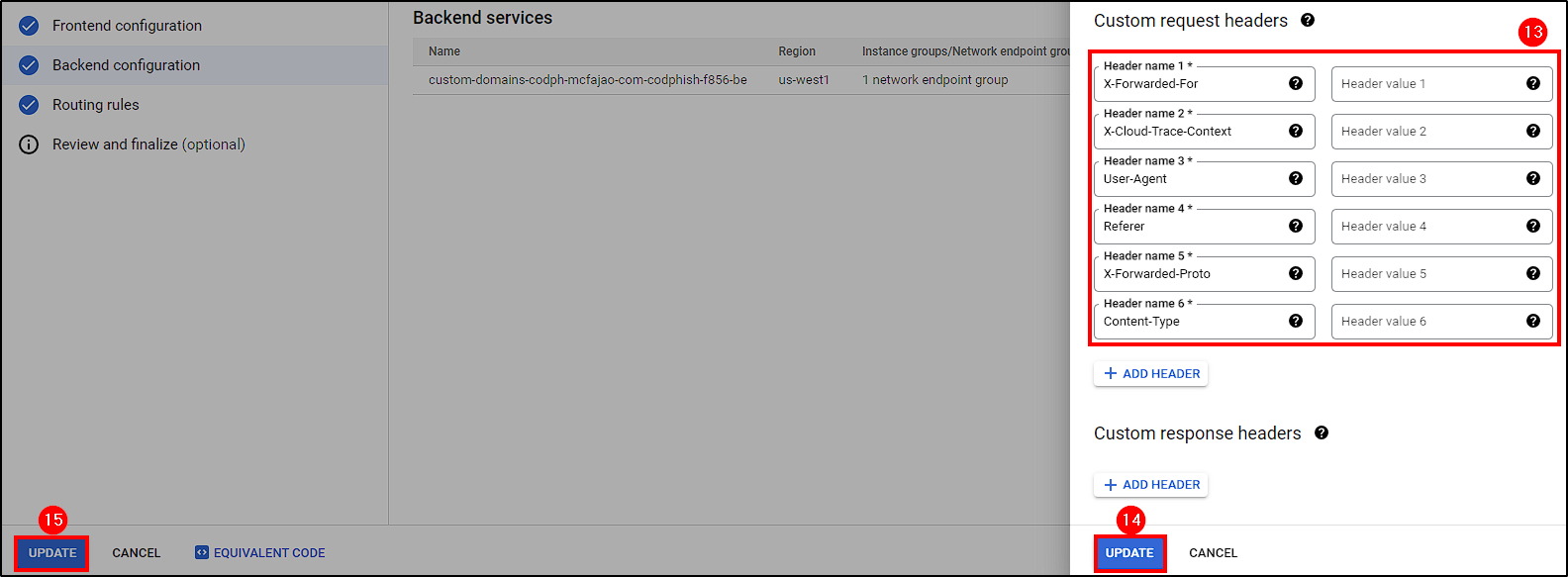

The final step is to make some changes to the custom load balancer. This includes attaching the Cloud Armor Security Policy, enabling logging, and adding custom request headers.

Go to Network Services, select the Load Balancer, and click on Edit. Follow the steps shown in the picture below.

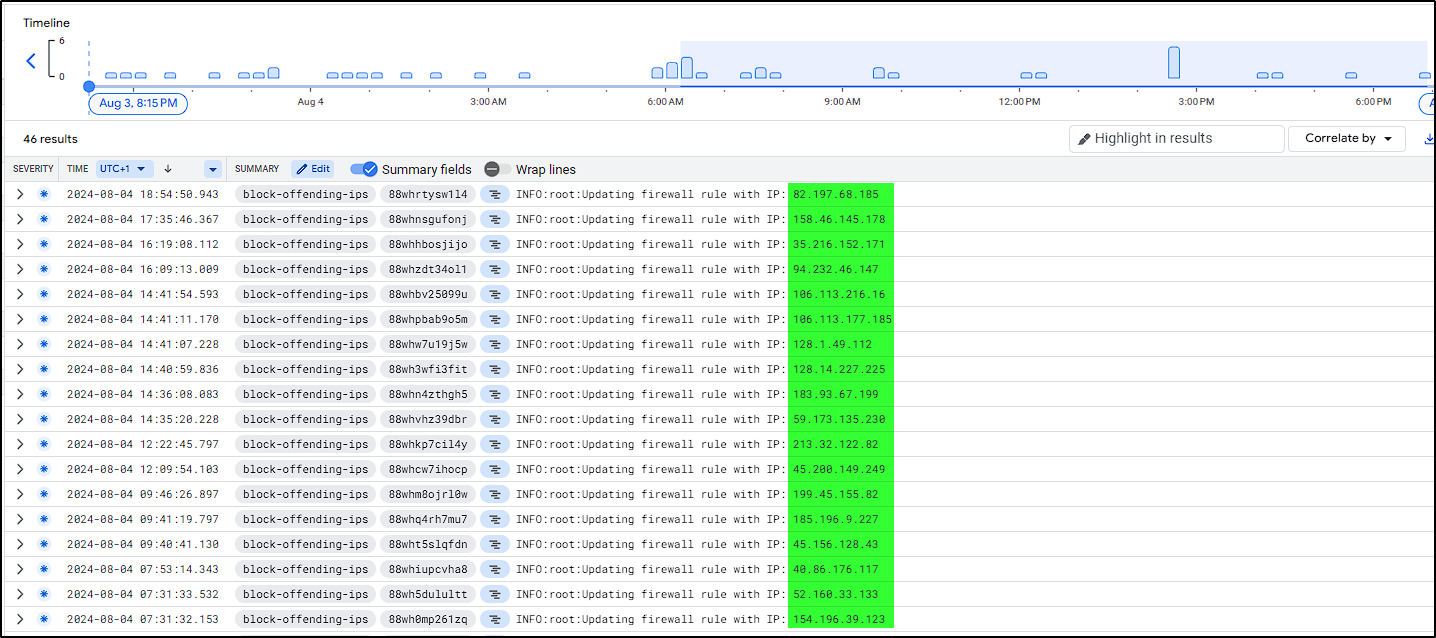

Malicious/Scanners IP Block Automation

With our website running behind the Load Balancer, we will start receiving WARNING logs whenever a Cloud Armor rule gets triggered. Currently, malicious actors only receive a 404 page, but I want to block those IPs in the firewall. Instead of doing this manually, we can use Cloud Pub/Sub and Cloud Functions to automate the process.

Pub/Sub - Sink, Topic & Subscription Creation

Our first step is to ingest the load balancer logs and send them to Cloud Functions using the following components:

- Logging Sinks: Routes all Load Balancer logs triggered by a Cloud Armor policy to a Pub/Sub Topic.

- Pub/Sub Topic: Acts as a central point where all logs are collected.

- Pub/Sub Subscription: Ensures that logs published to the topic are delivered to the Cloud Function.
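By the time a log entry reaches a Pub/Sub-triggered function, it is wrapped in an event whose `data` field holds the entry as base64-encoded JSON. The sketch below builds and decodes such an event, which is handy for testing the function locally. The helper names are hypothetical, and a real event carries additional fields (attributes, message ID) that are left out here.

```python
import base64
import json


def make_pubsub_event(log_entry: dict) -> dict:
    """Wrap a log entry the way a Pub/Sub-triggered function receives it."""
    payload = json.dumps(log_entry).encode("utf-8")
    return {"data": base64.b64encode(payload).decode("ascii")}


def decode_pubsub_event(event: dict) -> dict:
    """Reverse of make_pubsub_event: base64-decode and parse the JSON body."""
    return json.loads(base64.b64decode(event["data"]).decode("utf-8"))


# A trimmed-down, made-up log entry with only the fields used later on.
sample_entry = {
    "httpRequest": {"remoteIp": "203.0.113.7"},
    "jsonPayload": {"enforcedSecurityPolicy": {"name": "codph-security"}},
}
event = make_pubsub_event(sample_entry)
```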

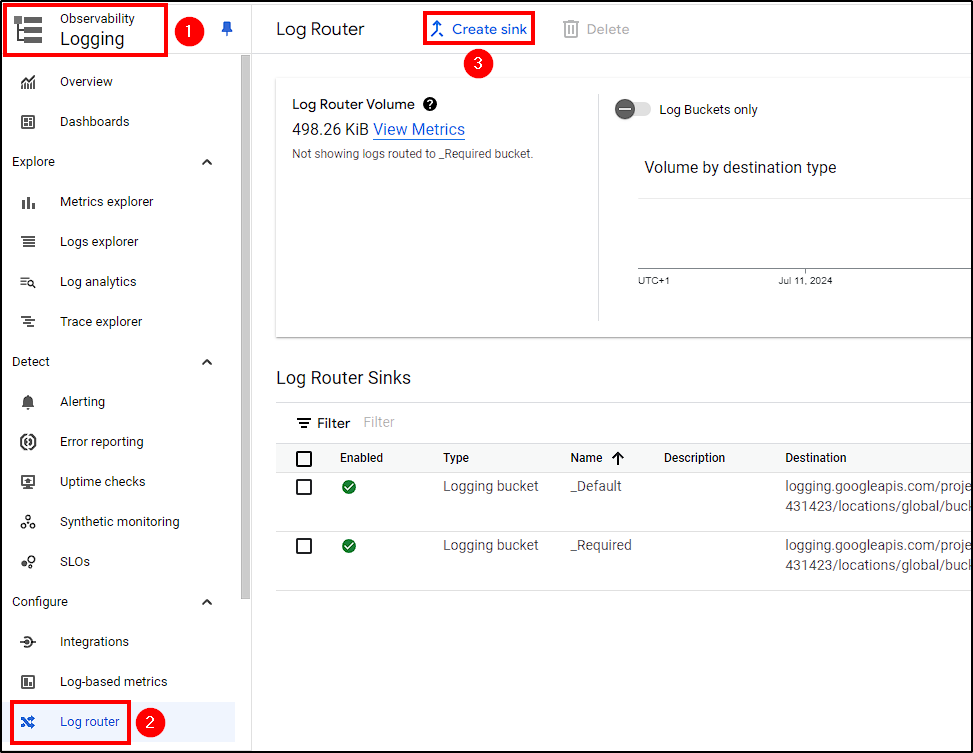

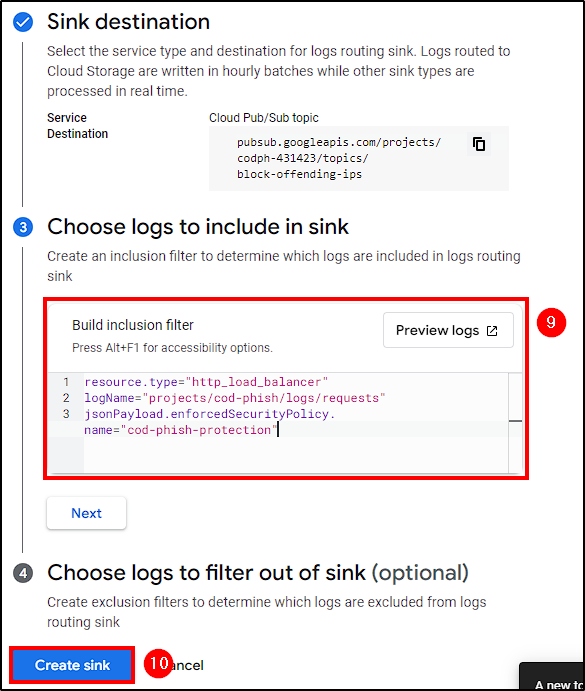

To create our Sink, go to Logging > Log Router > Create Sink and make the following changes:

- Name the sink

- Select Cloud Pub/Sub topic as the service

- Create a topic (just name it and leave everything as default)

- Create a filter to include only Load Balancer logs triggered by Cloud Armor.

- Select Create Sink

```
-- Filter (replace the project ID and policy name with your own)
resource.type="http_load_balancer"
logName="projects/cod-phish/logs/requests"
jsonPayload.enforcedSecurityPolicy.name="cod-phish-protection"
```
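The three filter lines are implicitly joined with AND, so an entry is forwarded only when all of them match. As a rough illustration, the same check on an already-parsed log entry could look like the sketch below. The function name is hypothetical, and the project ID and policy name are parameters because they must match your own setup.

```python
def matches_sink_filter(entry: dict, project_id: str, policy_name: str) -> bool:
    """Mirror the three conditions of the sink filter for a parsed log entry."""
    policy = entry.get("jsonPayload", {}).get("enforcedSecurityPolicy", {})
    return (
        entry.get("resource", {}).get("type") == "http_load_balancer"
        and entry.get("logName") == f"projects/{project_id}/logs/requests"
        and policy.get("name") == policy_name
    )
```

If the policy name in the filter differs from the one attached to the load balancer, nothing reaches the topic, so it is worth double-checking both values.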

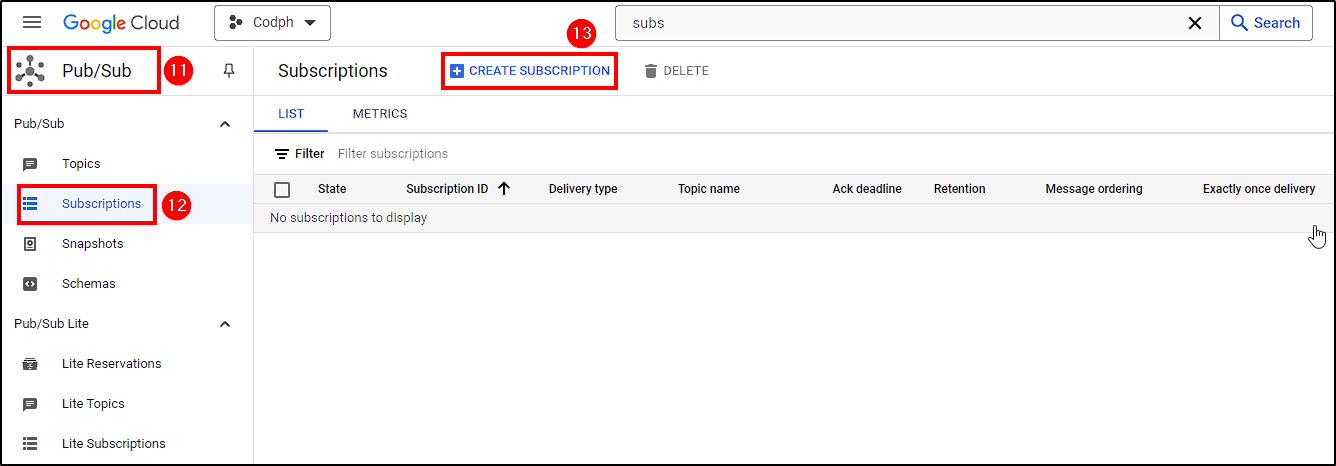

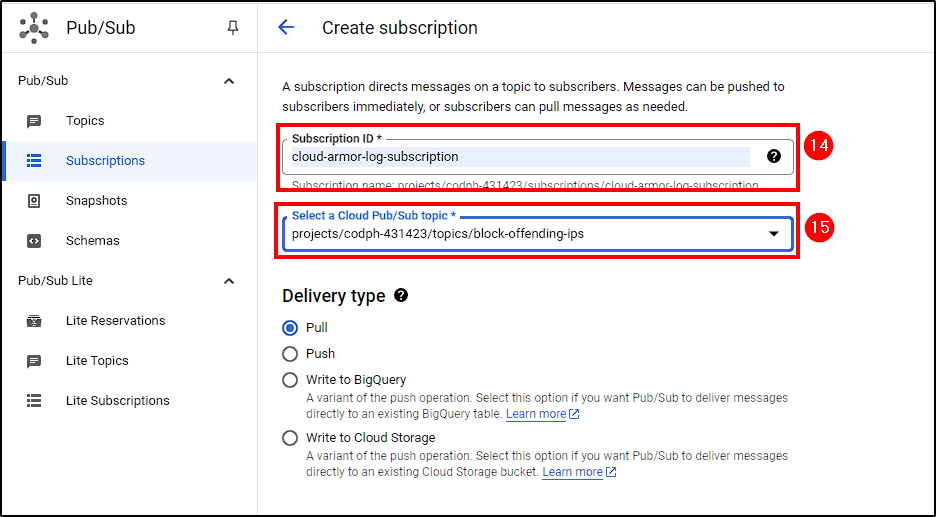

Next, create a subscription. Go to Cloud Pub/Sub > Subscriptions > Create Subscription, name it, select the topic we created earlier, and create the subscription.

Cloud Functions

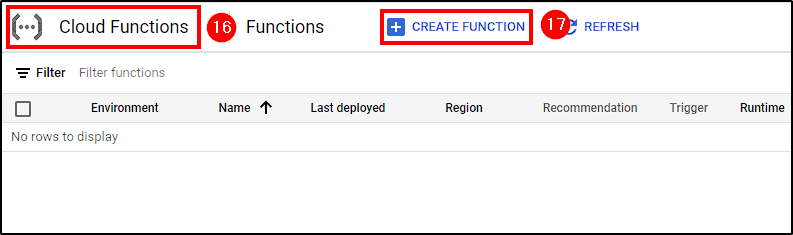

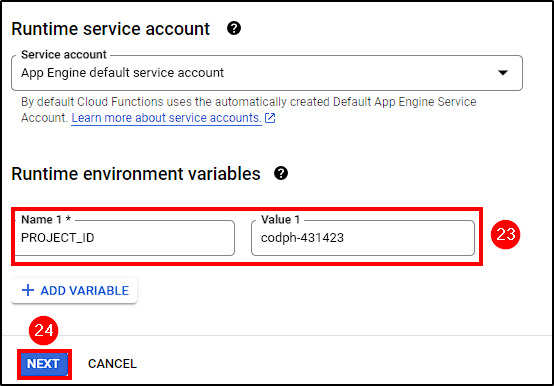

Now that all components are ready, we need to create a Function that will filter the IPs from the logs sent from Cloud Pub/Sub and create a Firewall rule to block them. Go to Cloud Functions > Create Function and follow these steps:

- Select the 1st gen environment

- Name your function and select the region

- Select the Topic created earlier as the trigger and save it

- Add the environment variable PROJECT_ID and insert your project ID as the value

- Select Next

Ensure the App Engine service account has the Compute Security Admin role for the function to work.

Next, add our function code. Make sure to switch the runtime to Python 3.9 and that the entry point matches the function name (block_offending_ips).

```python
import base64
import ipaddress
import json
import logging
import os

from googleapiclient.discovery import build
from googleapiclient.errors import HttpError


def block_offending_ips(event, context):
    """Pub/Sub-triggered function: add the offending IP to a deny firewall rule."""
    logging.basicConfig(level=logging.INFO)

    compute = build('compute', 'v1')
    project_id = os.environ.get('PROJECT_ID', 'codph-431423')
    firewall_rule_name = 'block-offending-ips'

    # The log entry arrives as base64-encoded JSON in the Pub/Sub message
    pubsub_message = base64.b64decode(event['data']).decode('utf-8')
    log_entry = json.loads(pubsub_message)

    offending_ip = None
    json_payload = log_entry.get('jsonPayload', {})
    enforced_policy = json_payload.get('enforcedSecurityPolicy', {})

    if enforced_policy.get('name') == 'codph-security':  # CHANGE TO YOUR CLOUD ARMOR SECURITY POLICY!
        headers = json_payload.get('request', {}).get('headers', {})
        offending_ip = headers.get('x-forwarded-for')
        if not offending_ip and 'httpRequest' in log_entry:
            offending_ip = log_entry['httpRequest'].get('remoteIp')

    # ip_address() raises ValueError on malformed input, so validate with try/except
    try:
        offending_ip = str(ipaddress.ip_address((offending_ip or '').strip()))
    except ValueError:
        return 'No valid IP found to block', 400

    logging.info(f"Attempting to block IP: {offending_ip}")

    # Look up the existing rule; a 404 means it has not been created yet
    try:
        firewall_rule = compute.firewalls().get(project=project_id, firewall=firewall_rule_name).execute()
    except HttpError as e:
        if e.resp.status == 404:
            firewall_rule = None
        else:
            return f'Error getting firewall rule: {str(e)}', 500

    try:
        if firewall_rule:
            # Append the IP to the existing rule if it is not already listed
            existing_ips = firewall_rule.get('sourceRanges', [])
            if offending_ip not in existing_ips:
                existing_ips.append(offending_ip)
                firewall_rule['sourceRanges'] = existing_ips
                compute.firewalls().update(project=project_id, firewall=firewall_rule_name, body=firewall_rule).execute()
        else:
            # First offender: create the deny rule
            firewall_rule_body = {
                "name": firewall_rule_name,
                "network": "global/networks/default",
                "sourceRanges": [offending_ip],
                "denied": [{"IPProtocol": "tcp"}, {"IPProtocol": "udp"}],
                "direction": "INGRESS",
                "priority": 1000,
                "description": "Block offending IPs detected by Cloud Armor"
            }
            compute.firewalls().insert(project=project_id, body=firewall_rule_body).execute()
        return 'Firewall rule updated or created', 200
    except HttpError as e:
        return f'Error updating/creating firewall rule: {str(e)}', 500
```

```
# requirements.txt
google-api-python-client==2.82.0
google-cloud-pubsub==2.12.0
```

Deploy it, and that’s it! Our function will start creating firewall rules to ban IPs based on the Load Balancer logs.
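If you want to check the update-or-create logic without calling the Compute API, one option is to isolate it as a pure function. This is a sketch of that idea rather than code from the deployed function. The name `add_ip_to_rule` is hypothetical, and the rule body mirrors the one used in the function.

```python
import copy


def add_ip_to_rule(rule, ip, name="block-offending-ips"):
    """Return (rule_body, changed) after adding `ip` to the deny rule.

    `rule` is the existing firewall rule as a dict, or None if it does not exist.
    """
    if rule is None:
        body = {
            "name": name,
            "network": "global/networks/default",
            "sourceRanges": [ip],
            "denied": [{"IPProtocol": "tcp"}, {"IPProtocol": "udp"}],
            "direction": "INGRESS",
            "priority": 1000,
            "description": "Block offending IPs detected by Cloud Armor",
        }
        return body, True
    if ip in rule.get("sourceRanges", []):
        return rule, False  # already blocked, nothing to send to the API
    updated = copy.deepcopy(rule)  # leave the caller's dict untouched
    updated.setdefault("sourceRanges", []).append(ip)
    return updated, True
```

The `changed` flag tells the caller whether an `insert` or `update` call is needed at all, which avoids an API request for IPs that are already blocked.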

Final Thoughts

Working on this project was a valuable learning experience, teaching me a lot about Google Cloud’s capabilities and services. There were many times I had to study the documentation to troubleshoot and configure everything correctly. This project has motivated me to continue learning about cloud computing and explore other cloud providers. I’m excited to keep improving my skills and apply what I’ve learned to future projects. Thank you for following along with my journey.